Gaze Gesture Recognition

leveraging gesture recognition to classify gaze scan paths

This work is the final project from a special topics course I took at the University of Florida called Human-Centered Input Recognition Algorithms. The course provided an overview of the $-family recognizers and asked us to extend some facet of the $1 recognizer for the final project. The $1 recognizer is an instance-based nearest-neighbor classifier: it labels a user's input with the class of the closest stored template. I am expanding this project as my senior capstone at UF, with the goal of classifying natural user gaze gestures by scan path shape.
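To make the nearest-neighbor idea concrete, here is a minimal Python sketch of template matching in the spirit of the $1 recognizer. It is not the project's code and it is deliberately simplified: it resamples, translates, and scales each stroke, but it skips the $1 recognizer's rotation-invariance step and scales uniformly instead of to a square. The function names, the `(label, points)` template format, and the default of 64 resampled points are all assumptions made for illustration.

```python
import math


def path_length(points):
    """Total length of the polyline through the points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def resample(points, n=64):
    """Resample a stroke to n points spaced evenly along its path."""
    interval = path_length(points) / (n - 1)
    pts = list(points)
    out = [pts[0]]
    accumulated = 0.0
    i = 1
    while i < len(pts):
        d = math.dist(pts[i - 1], pts[i])
        if d > 0 and accumulated + d >= interval:
            # Interpolate a new point exactly one interval along the path.
            t = (interval - accumulated) / d
            q = (pts[i - 1][0] + t * (pts[i][0] - pts[i - 1][0]),
                 pts[i - 1][1] + t * (pts[i][1] - pts[i - 1][1]))
            out.append(q)
            pts.insert(i, q)  # q becomes the start of the next segment
            accumulated = 0.0
        else:
            accumulated += d
        i += 1
    # Floating-point rounding can leave the result one point short.
    while len(out) < n:
        out.append(pts[-1])
    return out[:n]


def normalize(points, n=64):
    """Resample, move the centroid to the origin, and scale uniformly."""
    pts = resample(points, n)
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]
    size = max(max(xs) - min(xs), max(ys) - min(ys)) or 1.0
    cx, cy = sum(xs) / n, sum(ys) / n
    return [((x - cx) / size, (y - cy) / size) for x, y in pts]


def path_distance(a, b):
    """Average point-to-point distance between two equal-length strokes."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def classify(candidate, templates):
    """Return the label of the stored template nearest to the candidate."""
    cand = normalize(candidate)
    best = min(templates, key=lambda t: path_distance(cand, normalize(t[1])))
    return best[0]


# Hypothetical templates: one example stroke per gesture class.
templates = [
    ("line", [(0, 0), (10, 0)]),
    ("vee", [(0, 0), (5, 10), (10, 0)]),
]
print(classify([(1, 1), (6, 9), (11, 2)], templates))
```

Because the classifier is instance-based, adding a gesture class or a new participant's examples only means appending to `templates`; there is no training step.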

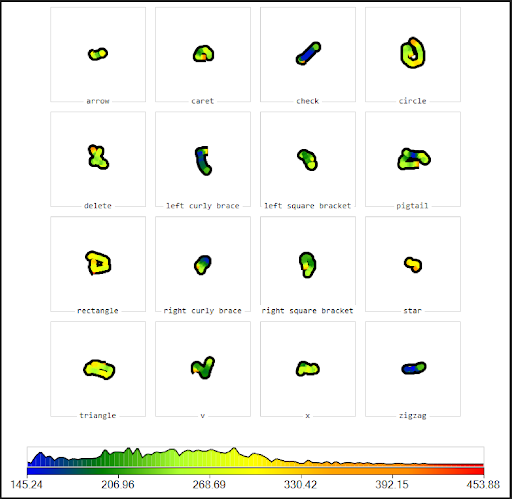

Rather than extending the recognizer itself, this project evaluates how well it recognizes user gestures in a new modality: eye gaze. Gaze suits unistroke gestures because it arrives as a continuous stream of data (excluding blinks and other moments when the eyes are not visible). As an extension, this falls within the category of collecting a new dataset. Each participant performed the same sixteen unistroke gestures, though the change of input medium is expected to produce significant differences from the original experiment with mouse input.
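As a rough illustration of treating gaze as a unistroke, the sketch below turns a stream of gaze samples into a single list of points by dropping the samples where the eyes were not visible. The sample format is an assumption, not the project's actual data format: each sample is taken to be an `(x, y)` pair, with `None` or NaN coordinates marking a blink or tracking loss. Bridging gaps by simply skipping them is also just one possible choice.

```python
import math


def gaze_to_stroke(samples):
    """Keep only valid gaze samples, in order, as one continuous stroke.

    Assumed (hypothetical) format: each sample is an (x, y) pair, or None /
    NaN coordinates when the tracker could not see the eyes.
    """
    stroke = []
    for sample in samples:
        if sample is None:
            continue  # blink or tracking loss reported as a missing sample
        x, y = sample
        if math.isnan(x) or math.isnan(y):
            continue  # tracking loss reported as NaN coordinates
        stroke.append((float(x), float(y)))
    return stroke


# A made-up stream with one blink and one NaN sample.
stream = [(0, 0), (1, 2), None, (float("nan"), 3), (4, 5)]
print(gaze_to_stroke(stream))
```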