Gaze-Based Authentication

Evaluating gaze-based authentication techniques for nuclear operator training in virtual reality

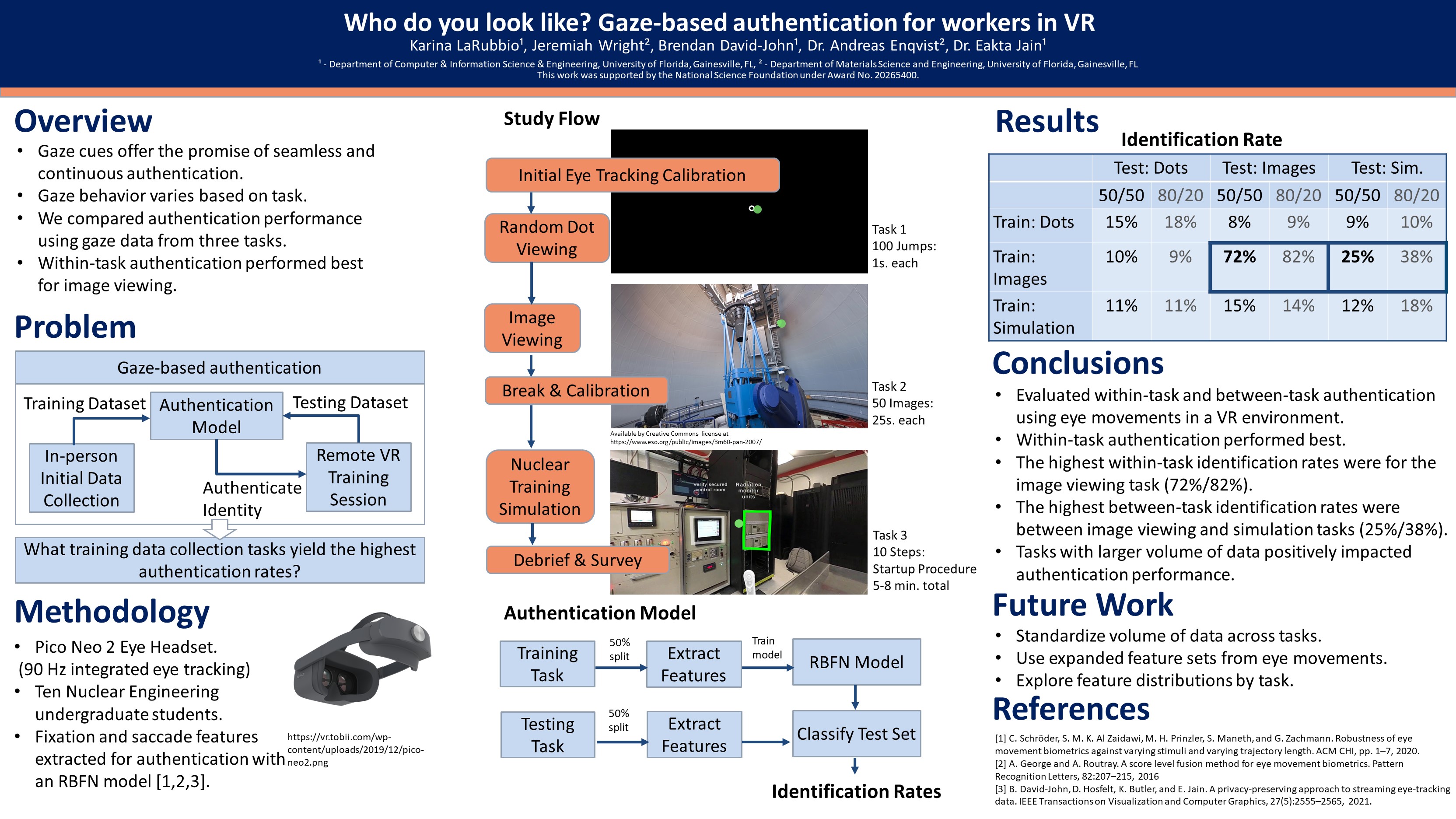

Behavior-based authentication methods are actively being developed for XR. In particular, gaze-based methods promise continuous authentication of remote users. However, gaze behavior depends on the task being performed. Identification rate is typically highest when comparing data from the same task. In this study, we compared authentication performance using VR gaze data during random dot viewing, 360-degree image viewing, and a nuclear training simulation. We found that within-task authentication performed best for image viewing (72%). The implication for practitioners is to integrate image viewing into a VR workflow to collect gaze data that is viable for authentication.
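The within-task versus cross-task comparison can be pictured as a matrix of identification rates: enroll users on one task's gaze data, then probe with another task's data. The sketch below is not the study's pipeline. It swaps in a simple nearest-centroid matcher for brevity, and the task names, two-dimensional "gaze features", and data points are hypothetical, deliberately exaggerated so that a user's gaze signature in one task does not carry over to the other.

```python
import math
from typing import Dict, List, Tuple

Split = Tuple[List[List[float]], List[int]]  # (feature vectors, user labels)


def centroids(X: List[List[float]], y: List[int]) -> Dict[int, List[float]]:
    """Mean feature vector per user, used here as that user's enrolled template."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = {}
    for x, user in zip(X, y):
        if user not in sums:
            sums[user] = [0.0] * len(x)
            counts[user] = 0
        sums[user] = [s + v for s, v in zip(sums[user], x)]
        counts[user] += 1
    return {u: [s / counts[u] for s in sums[u]] for u in sums}


def identify(templates: Dict[int, List[float]], x: List[float]) -> int:
    """Predict the enrolled user whose template is closest (Euclidean) to x."""
    return min(templates, key=lambda u: math.dist(templates[u], x))


def identification_rate(enroll: Split, probe: Split) -> float:
    """Fraction of probe samples attributed to the correct user."""
    templates = centroids(*enroll)
    X, y = probe
    hits = sum(identify(templates, x) == user for x, user in zip(X, y))
    return hits / len(y)


def task_matrix(data: Dict[str, Tuple[Split, Split]]) -> Dict[Tuple[str, str], float]:
    """rates[(a, b)]: enroll on task a's training split, probe with task b's test split."""
    return {(a, b): identification_rate(data[a][0], data[b][1]) for a in data for b in data}


# Hypothetical toy data for two users; each task has a (train, test) split.
data = {
    "dots": (
        ([[0.0, 0.1], [0.1, 0.0], [1.0, 0.1], [0.9, 0.0]], [0, 0, 1, 1]),
        ([[0.05, 0.0], [0.95, 0.1]], [0, 1]),
    ),
    "images": (
        ([[1.0, 0.9], [0.9, 1.0], [0.0, 0.9], [0.1, 1.0]], [0, 0, 1, 1]),
        ([[0.95, 1.0], [0.05, 0.9]], [0, 1]),
    ),
}
rates = task_matrix(data)
for (enroll_task, probe_task), rate in sorted(rates.items()):
    print(f"enroll={enroll_task:<6} probe={probe_task:<6} rate={rate:.2f}")
```

In this exaggerated toy case the diagonal (within-task) entries are perfect and the off-diagonal (cross-task) entries fail, which mirrors, in extreme form, why identification rate tends to be highest when enrollment and probe data come from the same task.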

I got started on this project in my first year at UF as part of my University Research Scholars Program Course-based Undergraduate Research experience. We learned about VR development in Unity and brainstormed how a VR-based training scenario might be implemented. I continued my involvement the following summer through an NSF Research Experience for Undergraduates (REU).

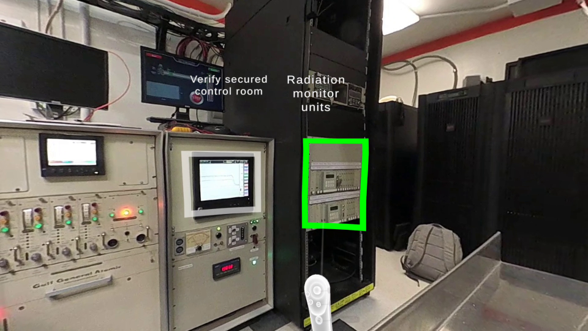

My role in this project was to work with our collaborators from nuclear engineering at UF to implement a VR-based nuclear training simulation. To complete our experimental design, I also implemented random dot viewing and image viewing activities, tracking users' eye movements throughout each task. I adapted a radial basis function network classifier to take gaze data from these tasks as input and attempt to authenticate users. I went on to lead the production of a short paper and poster for publication at IEEE VR 2022.
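A radial basis function (RBF) network of the kind mentioned above can be illustrated with a small, dependency-free sketch. It is not the classifier used in the study: the feature vectors, the choice of one Gaussian center per user (placed at that user's mean feature vector), the width of 0.3, the ridge-regularized least-squares fit of the output weights, and the `authenticate` threshold are all assumptions made for illustration. Identification picks the user with the highest output score; authentication checks a claimed identity against those scores.

```python
import math
import random
from typing import List, Sequence


def gaussian(x: Sequence[float], c: Sequence[float], width: float) -> float:
    """Gaussian radial basis activation: exp(-||x - c||^2 / (2 * width^2))."""
    d2 = sum((a - b) ** 2 for a, b in zip(x, c))
    return math.exp(-d2 / (2.0 * width ** 2))


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    w = [0.0] * n
    for i in range(n - 1, -1, -1):
        w[i] = (M[i][n] - sum(M[i][j] * w[j] for j in range(i + 1, n))) / M[i][i]
    return w


class RBFNetwork:
    """Gaussian hidden layer plus a linear output layer (one output per user)."""

    def __init__(self, width: float = 0.3, ridge: float = 1e-6):
        self.width, self.ridge = width, ridge
        self.centers: List[List[float]] = []
        self.weights: List[List[float]] = []

    def _hidden(self, x: Sequence[float]) -> List[float]:
        # One activation per center, plus a constant bias unit.
        return [gaussian(x, c, self.width) for c in self.centers] + [1.0]

    def fit(self, X: List[List[float]], y: List[int]) -> "RBFNetwork":
        n_users, dim = max(y) + 1, len(X[0])
        # Assumption: one center per user, at that user's mean feature vector.
        self.centers = []
        for u in range(n_users):
            rows = [x for x, label in zip(X, y) if label == u]
            self.centers.append([sum(r[i] for r in rows) / len(rows) for i in range(dim)])
        H = [self._hidden(x) for x in X]
        m = len(H[0])
        # Ridge normal equations: (H^T H + ridge * I) w_u = H^T t_u, t_u one-hot targets.
        HtH = [[sum(h[i] * h[j] for h in H) + (self.ridge if i == j else 0.0)
                for j in range(m)] for i in range(m)]
        self.weights = []
        for u in range(n_users):
            t = [1.0 if label == u else 0.0 for label in y]
            Ht_t = [sum(h[i] * ti for h, ti in zip(H, t)) for i in range(m)]
            self.weights.append(solve(HtH, Ht_t))
        return self

    def scores(self, x: Sequence[float]) -> List[float]:
        h = self._hidden(x)
        return [sum(w * v for w, v in zip(wu, h)) for wu in self.weights]

    def predict(self, x: Sequence[float]) -> int:
        s = self.scores(x)
        return max(range(len(s)), key=s.__getitem__)

    def authenticate(self, x: Sequence[float], claimed_user: int, threshold: float = 0.5) -> bool:
        # Hypothetical rule: accept if the claimed user is the top match and clears the threshold.
        return self.predict(x) == claimed_user and self.scores(x)[claimed_user] >= threshold


# Hypothetical per-user mean gaze features with small Gaussian noise.
rng = random.Random(0)
user_means = [[0.2, 0.8], [0.8, 0.2], [0.5, 0.5]]
X, y = [], []
for u, mu in enumerate(user_means):
    for _ in range(10):
        X.append([m + rng.gauss(0.0, 0.05) for m in mu])
        y.append(u)
net = RBFNetwork(width=0.3).fit(X, y)
acc = sum(net.predict(x) == label for x, label in zip(X, y)) / len(y)
print(f"training identification rate: {acc:.2f}")
```

Fitting the output layer in closed form keeps the sketch deterministic. The printed rate is measured on training data only; a real evaluation would extract features from recorded eye-tracking streams and score held-out sessions, within and across tasks.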

For more information about this project, please see our teaser video, poster, GitHub repository, and IEEE page.

K. LaRubbio, J. Wright, B. David-John, A. Enqvist and E. Jain, “Who do you look like? - Gaze-based authentication for workers in VR,” 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Christchurch, New Zealand, 2022, pp. 744-745, doi: 10.1109/VRW55335.2022.00223.